There are two dangerous practices that are rampant in performance testing right now – shrinking or removing think times and extrapolating test results. Collectively we need to work to put an end to these practices and start testing more realistically to deliver higher confidence. Let’s talk about each of these practices and why they’re bad news.

Shrinking or Removing Think Times

In the real world, users may spend anywhere from a few minutes on a typical website up to a few hours on a SaaS-type web application. For example, perhaps the application you’re going to test needs to support 5,000 concurrent users with an average visit length of 20 minutes. Over the course of the peak hour the site is expected to serve 1 million page views.

Rather than use 5,000 virtual users to generate the load over the course of an hour, you figure you’ll just use 500 virtual users and drop your session length down to two minutes… essentially cutting everything by a factor of ten. You will use fewer virtual users to generate the same number of page views. Many performance testers are doing this with no idea of how it actually translates into load on the downstream systems. Well, here is the bad news… at multiple points in the infrastructure this is going to result in, you guessed it, about ten times as much load as there should be.
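To see what the shortcut does to each virtual user, the arithmetic can be sketched in a few lines of Python. The figures are the ones from the example above (1 million page views in the peak hour, 5,000 versus 500 virtual users). The function name `seconds_between_pages` is only illustrative, and the sketch assumes page views are spread evenly across users and across the hour.

```python
def seconds_between_pages(page_views_per_hour: int, virtual_users: int) -> float:
    """Average gap between page views for a single virtual user.

    Assumes the load is spread evenly over all users and the whole hour.
    """
    pages_per_user_per_hour = page_views_per_hour / virtual_users
    return 3600 / pages_per_user_per_hour


realistic = seconds_between_pages(1_000_000, 5_000)   # 18.0 seconds per page
compressed = seconds_between_pages(1_000_000, 500)    # 1.8 seconds per page
print(realistic, compressed)
```

Under these assumptions each virtual user in the compressed test clicks roughly ten times faster than a real visitor would, and that is the behavior the downstream systems end up seeing.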

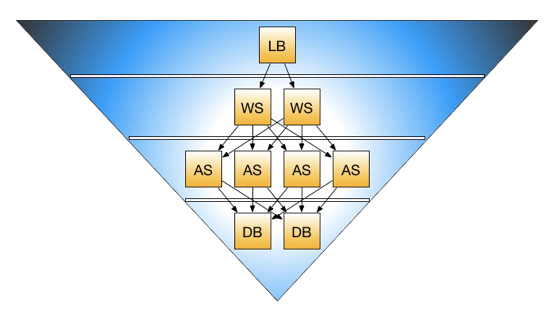

The typical 3-tier web application looks something like this:

It has a load balancer at the top, some web servers, some application servers, and a database cluster. There are typically firewalls in front of and in-between a few of the tiers. Here is a short list of some of the unnatural behaviors that can occur in the environment as a result of cheating the performance test cases:

- Too many connections to the firewalls

- Too many application server sessions

- TCP queues filling up on all servers

- Database connections piling up

Capacity models for web applications are typically built with a high-level concurrent user count that translates into some kind of click-through rate. For example, there might be 1000 users on the site at any given time, with 2% (the standard rate) clicking a button or link at any given second. This translates into a load model that will tell you things like how much memory you need for in-memory sessions, and how much CPU you need to service requests through concurrent clicks/requests.
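As a rough sketch of such a model, the example's numbers can be turned into a request rate. Only the 1,000 users and the 2% click rate come from the text. The function name is hypothetical, and a real capacity model would also need memory per session and CPU cost per request, which are not quantified here.

```python
def requests_per_second(concurrent_users: int, click_fraction_per_second: float) -> float:
    """Expected requests per second if a fixed fraction of users clicks each second."""
    return concurrent_users * click_fraction_per_second


rate = requests_per_second(1000, 0.02)
print(rate)  # 20.0
```

A 2% per-second click rate also implies that each user pauses about 50 seconds between clicks on average, which is the think time a realistic test script would need to reproduce.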

Modern application servers such as WebSphere and JBoss have session timeout values that determine when an idle session is invalidated, so that the memory reserved for that user becomes eligible for garbage collection in the Java virtual machine. This is usually somewhere between 15 and 30 minutes after the last click a user made. If all of the sessions in the load test finish in 2 minutes and then start over as new sessions, memory usage is going to increase very quickly. The session creation rate will outpace the destruction rate, because abandoned sessions linger until their timeout expires.
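One way to reason about this is Little's Law: sessions held in memory are roughly the session creation rate multiplied by the time each session stays in memory. The sketch below is an estimate under stated assumptions, not a description of how WebSphere or JBoss account for memory. It assumes a steady arrival of new sessions, a 30-minute timeout, and that the virtual-user count is held at 5,000 while the visit length is cut from 20 minutes to 2. The function name is hypothetical.

```python
def sessions_in_memory(virtual_users: int, visit_minutes: float, timeout_minutes: float) -> float:
    """Steady-state session count via Little's Law (arrival rate x residence time).

    Each virtual user starts a new session as soon as the previous visit ends,
    and an abandoned session lingers until the idle timeout expires.
    """
    new_sessions_per_minute = virtual_users / visit_minutes
    minutes_in_memory = visit_minutes + timeout_minutes
    return new_sessions_per_minute * minutes_in_memory


realistic = sessions_in_memory(5_000, visit_minutes=20, timeout_minutes=30)
compressed = sessions_in_memory(5_000, visit_minutes=2, timeout_minutes=30)
print(realistic, compressed)  # 12500.0 80000.0
```

The result depends heavily on how many virtual users are kept and on the configured timeout, so the inputs should be replaced with values measured for the application under test.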

By generating connections faster than normal and terminating them faster than real users would, the test can fill up the TCP queues on the network devices, causing requests to take longer to get served – or worse – causing the servers to run out of available sockets.

Everything gets compressed when this type of testing is being done. The ideal way to avoid this is to model your tests more accurately and use the right number of virtual users for your real-world load modeling and peak capacity testing. For some types of testing (throughput and breaking point) it makes sense to do as many transactions per second as you can, but peak readiness testing and capacity modeling for the web application are not among them.

Extrapolation of Results

Just as dangerous as shrinking your think times down is trying to make guesses about how many users you can support in a production environment when you tested at 1/10th the anticipated load in a scaled-down lab. This is like saying that because you can run a mile right now, you can run a marathon with no problems. As engineers, how can we make those types of claims? There are so many differences between the lab and production environments that not even the riskiest gambler would make a wager on that kind of math.

The performance lab is a great place for doing many types of work. Triaging performance problems, breakpoint testing and profiling code under load are among the kinds of work that can be performed in a lab. Answering questions about what your production capacity is shouldn’t be on that list. If you want to say with high confidence that you know what your capacity is and that you’ve tested for it, you need to test that load level, accurately, in production.

It seems like not a day goes by when we don’t experience poor performance or an outage on a web site we frequent. Poor performance testing practices, or a complete lack of performance testing, are largely to blame for this. Let’s stop cheating on our performance tests and start delivering the answers that application leaders, and ultimately our customers, are demanding. Test for the real-world scenarios in the real-world environment and know with a much higher degree of accuracy what is really going to happen on peak day.

About the Author

Dan Bartow is Vice President at SOASTA, the leader in load and performance testing from the cloud. Formerly he was Senior Manager of Engineering for TurboTax Online at Intuit, makers of TurboTax, Quicken and QuickBooks. During his career Dan has deployed high traffic sites for some of the web’s best known names, including AT&T Wireless, Best Buy, Neiman Marcus, Sony Online Entertainment, and many more. Some of Dan’s accomplishments include deploying the world’s largest stateful JBoss cluster and setting an industry precedent by using over 2200 cloud computing cores to generate load against a live website.

Dan is a respected leader in the software industry as well as an accomplished public speaker. He has taught numerous training courses and has spoken at well known industry conferences such as the ATG Insight user conference, the Software Test and Performance Conference, Amazon’s AWS Road Show, and Sys-Con’s Cloud Computing Expo.

Dan lives in the SF bay area and in his spare time he can usually be found surfing, watching surf movies, listening to surf-inspired music… or… okay you get the point.
